Insights

Helping not-for-profit organisations develop and sustain the highest levels of employee and volunteer engagement

Once you’ve collected your data, there are a lot of ways you could analyse your survey. Our approach – which we would recommend to you – is to share as much as you can, as simply as you can.

This post aims to help you get the basics right when analysing your engagement survey results. If you and your team are ready to take the analysis a step further, keep an eye out for our upcoming post, ‘Going deeper with survey data: Intersectionality, Correlation, and Regression’.

Sometimes you will be operating with a tight budget or a quick turnaround, and an internal analysis of your survey results might be all that’s in scope. The good news is that you can do all the essentials in Microsoft Excel, with straightforward formulae.

Find out more about Excel’s essential functions in this GoSkills article, or about Microsoft Power BI dashboards in this Simplilearn tutorial.

In this post, I’ll illustrate the survey analysis with our platform, Reflections, which does all the hard work for you.

Identify the proportion (%) of respondents who chose each option: Proportions are more useful than raw counts because they let you compare results between groups of different sizes.

Avoid using decimals: Whole numbers are faster to read and easier to compare.

Group the results into a single figure: Simplify the results by giving one key figure for overall positivity, combining Strongly Agree + Agree (or the equivalent if your question uses a different scale, e.g. Very Likely + Likely).

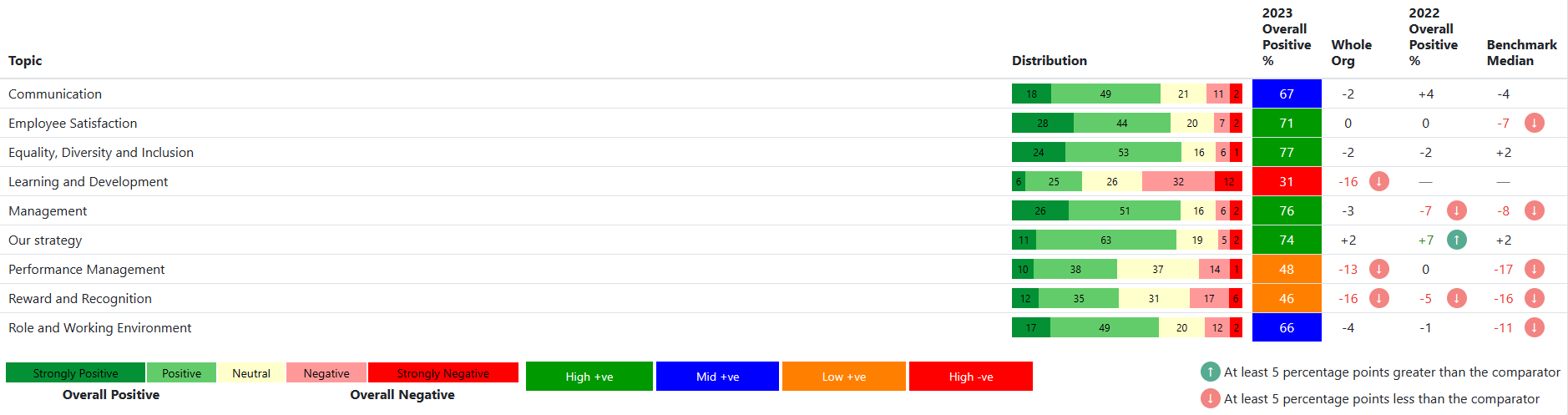
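If you’d rather script these steps than build Excel formulae, the sketch below shows one way to do it in Python. It assumes a five-point agreement scale and a plain list of answers to a single question; the names `SCALE`, `POSITIVE`, `pct` and `summarise_question` are our own, not part of Reflections or any library.

```python
import math
from collections import Counter

# Assumed five-point agreement scale; replace with your own labels.
SCALE = ["Strongly Agree", "Agree", "Neutral", "Disagree", "Strongly Disagree"]
POSITIVE = {"Strongly Agree", "Agree"}


def pct(part, whole):
    """Percentage as a whole number, rounding halves up (like Excel's ROUND)."""
    return math.floor(100 * part / whole + 0.5)


def summarise_question(responses):
    """Return the % choosing each option, plus an 'Overall positive' %."""
    if not responses:
        raise ValueError("no responses to summarise")
    counts = Counter(responses)
    n = len(responses)
    summary = {option: pct(counts[option], n) for option in SCALE}
    # Combine the raw counts before rounding, so rounding errors don't add up
    summary["Overall positive"] = pct(sum(counts[o] for o in POSITIVE), n)
    return summary


responses = (["Strongly Agree"] * 3 + ["Agree"] * 4
             + ["Neutral"] * 2 + ["Disagree"] * 1)
print(summarise_question(responses))
# {'Strongly Agree': 30, 'Agree': 40, 'Neutral': 20, 'Disagree': 10, 'Strongly Disagree': 0, 'Overall positive': 70}
```

Calculating the overall positive figure from the raw counts, rather than adding two already-rounded percentages, keeps it as accurate as possible. Rounded option percentages can still total 99 or 101.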

Consider using a traffic-light system or other colour coding, based on the overall positive %, to show where results are good and where they could be improved.

Benchmark your results with +/- figures: Show how results have changed since the previous survey, or how they compare with an external benchmark, using a +/- figure based on the overall positive %. Colour-code this too.

Don’t overcomplicate things! Some organisations we’ve worked with ask whether they should use weighted averages, i.e. adjusting the scores of male and female respondents to reflect the gender ratio in their organisation. Our advice is always to keep it simple: if you start changing the data so that it no longer reflects the real views shared by your employees, you won’t be able to make such strong claims about your evidence, and you are likely to confuse your colleagues.

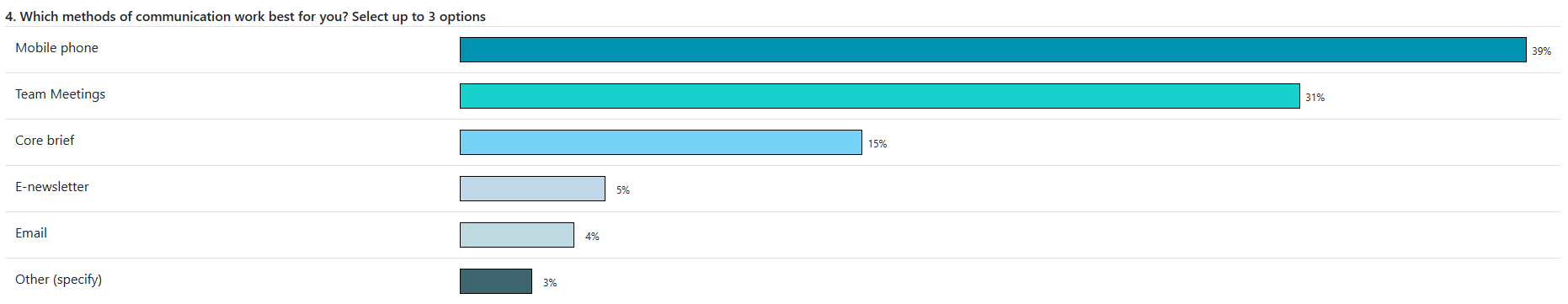
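A minimal Python sketch of the traffic-light and +/- steps follows. We don’t prescribe where green ends and amber begins, so the 70 and 50 cut-offs below are hypothetical placeholders, as is colouring an unchanged score grey.

```python
# Hypothetical cut-offs: set your own to suit your organisation.
GREEN_FROM = 70
AMBER_FROM = 50


def traffic_light(overall_positive):
    """Colour for an overall positive % (whole number)."""
    if overall_positive >= GREEN_FROM:
        return "green"
    if overall_positive >= AMBER_FROM:
        return "amber"
    return "red"


def change_vs(current, comparison):
    """+/- figure and colour for the change in overall positive %.

    `comparison` can be last survey's score or an external benchmark.
    """
    diff = current - comparison
    label = f"{diff:+d}" if diff else "0"
    colour = "green" if diff > 0 else "red" if diff < 0 else "grey"
    return label, colour


print(traffic_light(72), change_vs(72, 65))  # green ('+7', 'green')
print(traffic_light(48), change_vs(48, 53))  # red ('-5', 'red')
```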

When your question has options that form categories, rather than a scale, or if it’s a question where respondents can choose more than one option, it’s best to show it as a bar chart.

Identify the proportion (%) of respondents who chose each option.

Arrange the data from highest % to lowest %, so you can easily see which options were chosen the most.
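For categorical or multi-select questions, here’s a hedged Python sketch that counts each option and orders the results from most to least chosen. It assumes each respondent’s answer arrives as a list of options (a single-choice answer is just a one-item list). Because the base is the number of respondents, the percentages for a multi-select question can add up to more than 100%.

```python
import math
from collections import Counter


def option_percentages(answers):
    """answers: one list of chosen options per respondent.

    Returns (option, %) pairs from most to least chosen, with the
    number of respondents as the base.
    """
    n = len(answers)
    # set() stops a duplicated choice being counted twice for one person
    counts = Counter(option for chosen in answers for option in set(chosen))
    # Sort on the raw counts (ties alphabetically), not the rounded %
    ordered = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [(option, math.floor(100 * count / n + 0.5)) for option, count in ordered]


answers = [["Flexible working", "Training"], ["Training"], ["Pay"], ["Training", "Pay"]]
for option, share in option_percentages(answers):
    # A crude text bar, one '#' per 5%, as a stand-in for a real chart
    print(f"{option:<16} {'#' * (share // 5)} {share}%")
```

Options that nobody chose won’t appear in the output; add them back at 0% if you want every option on the chart.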

Open question responses can seem overwhelming to analyse if you had a lot of respondents in your survey. We recommend that you focus your analysis on a random sample of 100 responses – we’ve found this will generally be representative of your whole dataset.
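Drawing the random sample takes only a few lines. This Python sketch assumes your responses are a list of strings; dropping blank answers first is our own assumption, as is the optional `seed`, which lets a colleague re-draw exactly the same sample later.

```python
import random


def sample_responses(responses, k=100, seed=None):
    """Simple random sample (without replacement) of open-text responses.

    Blank answers are dropped first; if k or fewer remain, all are returned.
    """
    answered = [r for r in responses if r and r.strip()]
    if len(answered) <= k:
        return answered
    return random.Random(seed).sample(answered, k)


all_comments = [f"Comment {i}" for i in range(1, 451)]  # stand-in data
sample = sample_responses(all_comments, seed=2024)
print(len(sample))  # 100
```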

Label each response with the key themes it captures.

Identify the most common themes, and present them in a bar chart.

Use the verbatim data: Share examples of the responses you’ve included under each theme. Your employees’ feelings, expressed in their own words, are a powerful source of evidence.
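Once you’ve labelled your sample by hand, counting the themes and pulling out example quotes can be automated. In this Python sketch, the (response, themes) pairs and the `quotes_per_theme` limit are hypothetical; the labelling itself is still your judgement.

```python
from collections import Counter, defaultdict


def summarise_themes(coded, quotes_per_theme=2):
    """coded: (response text, [themes]) pairs, labelled by hand.

    Returns (theme, number of responses, example quotes) tuples,
    most common theme first.
    """
    counts = Counter()
    quotes = defaultdict(list)
    for text, themes in coded:
        for theme in set(themes):  # count each theme once per response
            counts[theme] += 1
            if len(quotes[theme]) < quotes_per_theme:
                quotes[theme].append(text)
    ordered = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [(theme, count, quotes[theme]) for theme, count in ordered]


coded = [
    ("My manager always makes time for me", ["Managers"]),
    ("Workload is too high and it's stressful", ["Workload", "Stress"]),
    ("Pay hasn't kept up with the cost of living", ["Pay"]),
    ("Too many deadlines, I feel stressed", ["Workload", "Stress"]),
]
for theme, count, examples in summarise_themes(coded):
    print(f"{theme}: {count}/{len(coded)} responses, e.g. \"{examples[0]}\"")
```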

When you know the main themes coming through, you can use keywords to dig into opinions on crucial topics. Our Reflections dashboard enables clients to search their open question responses for key terms, e.g. Managers, Stress or Pay.
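If you’re working outside Reflections, a basic keyword search over your open responses might look like this Python sketch. It is not a description of how the Reflections search works; it simply matches any word that starts with the search term, ignoring case, so plurals and other word endings are caught.

```python
import re


def search_responses(responses, term):
    """Responses containing a word that starts with `term`, ignoring case.

    "stress" finds "stressed" and "stressful"; "pay" finds "Pay" but not
    "Repayment", because the match must start at a word boundary.
    """
    pattern = re.compile(r"\b" + re.escape(term), re.IGNORECASE)
    return [r for r in responses if pattern.search(r)]


responses = [
    "My manager is supportive",
    "I feel stressed most weeks",
    "Pay is fair for the sector",
    "Management rarely listens",
    "Repayment of expenses is slow",
]
print(search_responses(responses, "manag"))
# ['My manager is supportive', 'Management rarely listens']
```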

Grouping questions into topics allows you to make headline statements about how positive people are about different parts of their experience. This can give you the helicopter view of the survey that managers and leaders are often looking for.

To produce the topic score (in the top row of the table below), average the results of the questions in that topic (e.g. their overall positive %). You can compare the topic with the previous survey by averaging the comparison (+/-) scores for each question.

When we present questions in a topic, we show them from highest overall positive % to lowest, so you can see strengths and areas for improvement more quickly.
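The topic score and question ordering can be sketched in Python as follows. This assumes you already have each question’s overall positive % and its +/- change, and that every question counts equally in the average; `round_half_away` is a hypothetical helper that mimics how Excel’s ROUND treats halves (away from zero).

```python
import math


def round_half_away(x):
    """Round to a whole number, halves away from zero (like Excel's ROUND)."""
    return int(math.copysign(math.floor(abs(x) + 0.5), x))


def topic_summary(questions):
    """questions: dicts with 'question', 'positive' (overall positive %)
    and 'change' (+/- vs the previous survey), one per question in the topic.

    Returns (topic score, topic change, questions from highest to lowest %).
    """
    n = len(questions)
    score = round_half_away(sum(q["positive"] for q in questions) / n)
    change = round_half_away(sum(q["change"] for q in questions) / n)
    ordered = sorted(questions, key=lambda q: q["positive"], reverse=True)
    return score, change, ordered


topic = [  # hypothetical questions and results
    {"question": "I feel valued", "positive": 62, "change": 4},
    {"question": "I have the tools I need", "positive": 81, "change": -2},
    {"question": "I would recommend working here", "positive": 70, "change": 1},
]
score, change, ordered = topic_summary(topic)
print(score, f"{change:+d}")  # 71 +1
for q in ordered:
    print(f"{q['positive']}%  {q['question']}")
```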

When all your questions are grouped into topics, you can compare performance between topics, and identify key areas to celebrate and key areas to explore. Remember that questions within a topic can score very differently, so it’s still important to drill down to question level.

Once you’ve set out the results for the whole organisation, you can begin to share the results filtered for different groups, e.g. departments, sites, directorates or teams.

Set out this data in the same way as the organisation-wide results, but add a comparison to the Whole Org: this will help teams understand how they are performing in relation to the organisation as a whole.

Share this data with managers of departments and teams, so they can discuss their own results and plan actions accordingly. Consider also sharing the open question responses with managers, so they can understand the perspectives of staff in their own words.
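To produce a group’s results with a comparison to the Whole Org, you can split response-level data by group. This Python sketch assumes one (group, answer) pair per respondent for a single question, and treats Strongly Agree + Agree as positive; the function names and example data are our own.

```python
import math
from collections import defaultdict

POSITIVE = {"Strongly Agree", "Agree"}  # assumed agreement scale


def pct(part, whole):
    """Whole-number percentage, rounding halves up."""
    return math.floor(100 * part / whole + 0.5)


def positive_by_group(rows):
    """rows: (group, answer) pairs for one question, one per respondent.

    Returns {group: (overall positive %, +/- vs Whole Org)}.
    """
    answers_by_group = defaultdict(list)
    for group, answer in rows:
        answers_by_group[group].append(answer)
    # Whole Org uses every respondent, not an average of the group scores
    whole_org = pct(sum(answer in POSITIVE for _, answer in rows), len(rows))
    result = {"Whole Org": (whole_org, 0)}
    for group, answers in answers_by_group.items():
        score = pct(sum(a in POSITIVE for a in answers), len(answers))
        result[group] = (score, score - whole_org)
    return result


rows = [
    ("Fundraising", "Agree"), ("Fundraising", "Strongly Agree"),
    ("Fundraising", "Disagree"), ("Fundraising", "Agree"),
    ("Services", "Neutral"), ("Services", "Agree"),
    ("Services", "Disagree"), ("Services", "Strongly Disagree"),
]
print(positive_by_group(rows))
# {'Whole Org': (50, 0), 'Fundraising': (75, 25), 'Services': (25, -25)}
```

The +/- is taken from the rounded figures, so it matches the numbers managers will actually see in their report.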

Now that all the data are organised and you can see the results for different groups, you can start to compare their positivity. This will help you understand how the employee experience varies for people in different parts of the organisation, and in different demographic groups.

We present data like this in a ‘hotspot’ table, where the rows show the topics or questions, the columns show the different groups, and the cells show the colour-coded overall positive %.

This way, you can easily compare groups with the whole organisation and with each other, and see where they are scoring highly and where there might be areas of concern.
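As a rough illustration, this Python sketch prints a hotspot table as plain text, with G, A or R next to each figure standing in for the cell colour you’d apply in Excel or a dashboard. The 70/50 cut-offs and the example scores are hypothetical.

```python
# Hypothetical traffic-light cut-offs; use whatever your organisation agrees.
GREEN_FROM, AMBER_FROM = 70, 50


def rag(score):
    """Letter standing in for the cell colour: Green, Amber or Red."""
    if score >= GREEN_FROM:
        return "G"
    return "A" if score >= AMBER_FROM else "R"


def hotspot_table(scores, groups):
    """scores: {topic or question: {group: overall positive %}}.

    Returns text lines: one row per topic/question, one column per group.
    Missing results show as '-'.
    """
    label_width = max(len(label) for label in scores)
    width = max(6, max(len(g) for g in groups))  # "100% G" is 6 characters
    lines = [" " * label_width + " | " + " | ".join(g.rjust(width) for g in groups)]
    for label, row in scores.items():
        cells = ["-" if row.get(g) is None else f"{row[g]}% {rag(row[g])}" for g in groups]
        lines.append(label.ljust(label_width) + " | " + " | ".join(c.rjust(width) for c in cells))
    return lines


scores = {
    "Wellbeing": {"Whole Org": 64, "Fundraising": 72, "Services": 48},
    "Leadership": {"Whole Org": 55, "Fundraising": 58},
}
groups = ["Whole Org", "Fundraising", "Services"]
print("\n".join(hotspot_table(scores, groups)))
```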

You could also look more closely at how different groups answered each question or topic, using the format you’ve already used for the whole-organisation results: the % who chose each option, and how each group has performed compared with last time. This gives a more detailed view, but can be hard to read when there are many groups.

There are many ways in which you can analyse your engagement survey results to gain insight into how your people feel.

We recommend the analysis methods above as a good place to start: they will help you begin to unpack the meaning in your survey responses.

Once you’ve covered the basics, you can start to go deeper with other methods, including geographical analysis, correlation and regression analyses, and intersectionality.

Have questions on any of the above? Get in touch!

5 Linford Forum

Rockingham Drive

Milton Keynes

MK14 6LY

UK

Company No: 4509427